News and Resources

We will be adding regular news, updates and resources for anyone to read, share and use as a source of information. Use the sections below to navigate to a specific area of interest, or simply browse through the materials.

OI Pharma Partners Blog

Can Large Language Models Support Health Economic Modelling?

Publication Title: Artificial intelligence to automate health economic modelling: A case study to evaluate the potential application of large language models

Journal: Clinical and Translational Science (2025)

Link: https://ascpt.onlinelibrary.wiley.com/doi/10.1111/cts.70206

Dr Naylor, of OI Pharma Partners, collaborated with industry colleagues on a perspective piece discussing whether large language models (LLMs), like those behind popular generative AI tools, might support elements of health economic modelling. Using a case study format, the authors examine where LLMs perform well (including drafting model structures, generating code and producing documentation) and where current limitations still require expert oversight. It’s a high-level look at where things stand, and potentially useful for those working in modelling or market access who want to get oriented in the emerging conversation around LLMs in HEOR. It’s particularly relevant for teams experimenting with AI tools internally, or considering whether AI might help streamline documentation or early-stage model scoping.

Reflections from ISPOR Europe 2025: Applications of AI in HTA and HEOR are here!

Event: ISPOR Europe 2025, Glasgow, Scotland

Link: https://www.ispor.org/conferences-education/conferences/past-conferences/ispor-europe-2025

Dr Nichola Naylor represented OI Pharma Partners at ISPOR Europe 2025, held at the end of last year, presenting work on artificial intelligence in acute stroke care. The work was recognised within the top 5% of conference submissions: a great acknowledgement of both the research itself and the growing relevance of AI-enabled evaluation and AI decision-support tools in improving clinical pathways.

The event brought together global leaders in HEOR, with a notable shift toward operational rather than conceptual AI. Across sessions, several examples of this stood out:

- Large language models (LLMs) for HTA automation — especially extraction of evidence tables and structured data from multi-indication reports

- Hybrid intelligence approaches, combining human oversight with automated reasoning or retrieval-augmented generation (RAG) systems

- Multi-agent reimbursement simulation, with early examples showing high alignment between AI-generated review questions and real appraisal committee challenges

- Machine-learning-based EQ-5D prediction, where indirect domain-first pipelines outperformed direct index modelling

Practical applications discussed included automated model scoping, structured evidence extraction, literature synthesis and survival analysis. The consensus was that (human) expert governance remains essential for assumptions, input validation and the interpretation of uncertainty.

As adoption of decision-support AI accelerates in clinical settings, health economic modelling must continue to evolve, at pace, alongside it. For teams working in HEOR, ISPOR Europe 2025 reinforced the importance of transparent modelling frameworks and robust validation of AI-assisted pipeline components.

Resources for R and Health Economics

- Signposting Health Economic Packages in R for Decision Modelling (SHEPRD): hermes-sheprd.netlify.app

- Decision Analysis in R for Technologies in Health (DARTH): darthworkgroup.com

- R for HTA: r-hta.org

Resources for AI in Health

- AI & Pharma: Generative AI in the pharmaceutical industry (McKinsey)

- AI & Healthcare systems: Harnessing artificial intelligence for health (who.int)

R for HTA annual workshop 2024

Dr Nichola Naylor

For the past ten years, I’ve been passionate about leveraging R for health economic modelling in the field of health technology assessment (HTA). My experience spans various contexts, from guiding clinicians through the development of basic cost-effectiveness models in R to constructing intricate international costing models for the World Health Organization. Recently it was that time of year again: time to attend my favourite workshop. On June 28th (in person) and July 1st-2nd (online), I attended the “R for HTA” annual workshop hosted by the University of Sheffield’s ScHARR. It always feels great to find your fellow enthusiasts, and whilst the speakers and attendees spanned sectors, disease specialities, skill specialities and countries, there was one common goal: knowledge sharing in R and HTA. I hope to continue in this spirit by sharing some of my thoughts from the workshop, and since, for anyone else interested in this topic (see below).

Takeaways from the Talks:

All of the talks genuinely warrant a read through if you are interested in this topic (see the resources provided at the end of this piece). I won’t give an overview of every talk; instead, I will summarise some of my personal highlights and takeaways:

- HTA Agencies are Taking the Leap: with talks from HTA agencies such as the UK’s NICE and the Netherlands’ ZIN, their academic collaborators, and pharma company BMS on its submission experience, it feels like we are getting closer to R being accepted in certain HTA settings. There is still more to do as a collective to see this through across settings, which leads me to…

- The importance of Quality Assurance: this is easier to do, and more common, in R models than in MS Excel, but we tend not to go beyond the usual checks (do the transition probabilities sum to 1 at any given stage? Does the outcome make sense with extreme values? Do the output plots from sensitivity and scenario analyses make sense visually?). A new package could help with this, even linking to GPT 4.0 to help visualise your R model in the process (“assertHE”, complete with a YouTube tutorial: R HTA 24 AssertHE, Rob Smith & Tom Ward (youtube.com)). Also, keep an eye on whether the packages you use are still being maintained on CRAN, as some may get decommissioned (e.g. the “dampack” package)!

- Object orientation: Linked to the above (though based more on discussions I had with attendees outside the talks, and more of a personal learning goal), there’s a movement towards more object-oriented coding. This can help with quality assurance and link up with other software development methods, and it’s something I’m going to try to do more of. However, if you’re starting out, I would still “stick to the scripts”, i.e. functional programming. If this is all starting to read like another language in itself, it is discussed in Hadley Wickham’s Advanced R book (hadley.nz). If you are new, a plug for our previous papers on health economics in R: “Health Economic Evaluation Using Markov Models in R for Microsoft Excel Users: A Tutorial” (PharmacoEconomics) and “Extensions of Health Economic Evaluations in R for Microsoft Excel Users: A Tutorial for Incorporating Heterogeneity and Conducting Value of Information Analyses” (PharmacoEconomics).

- Making Health Economics Sparkle using Shiny Apps: On the technical side, one or two speakers mentioned moving beyond hosting their apps on shinyapps.io to using AWS (Amazon Web Services); the CSS in your Shiny app can be adapted to make it look nicer; and it’s better to use shades of a few colours rather than the whole rainbow. Beyond the technical: talk to stakeholders early and often, and even create a specific persona (e.g. “Alice who works in marketing”) and imagine her using your app. BUT draw the line somewhere: there will always be comments, and some will not help the user interface, so a balance needs to be struck between functionality and features.

- Machine Learning is Making Moves: The conference ended with a fantastic panel discussion on AI for health economics and outcomes research. Technology is advancing much faster than guidance can be produced, so it’s difficult to publish consensus on how to use it, but the panellists had experience linking R code for HTA with GPT models for various purposes. I asked the panel whether there was a risk in relying on ML models from private companies (rising prices? stability?) and whether models beyond GPT could or should be used. Answers: “yes” and “yes”. Claude (better) and Gemini were also mentioned as models we could link our code to, and luckily the structure of these APIs is similar, so code that calls one should, in theory, be easily adaptable to the others. There was also a brief discussion about how Python can now be executed in MS Excel and is what many AI programmes use, so perhaps the “R for HTA” umbrella should merge with a “Python for HTA” umbrella to form a “coding for HTA” canopy? It reminded me of last year’s talk by Devin Incerti on using Python for HTA (R for HTA 2023 Workshop, Devin Incerti (youtube.com)). Whatever you use, the advice was to break tasks down as much as possible (e.g. feed in specific functions rather than whole scripts) and to give context when using such models.
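The first check in the quality-assurance bullet above (do the transition probabilities sum to 1?) is easy to automate. The workshop was about R, but as a minimal sketch in Python, assuming a simple time-homogeneous cohort Markov model stored as a list of rows, with hypothetical states and probabilities that do not come from any talk (and this is not how assertHE itself works), such a check might look like:

```python
# Minimal sketch: quality-assurance checks on a Markov transition matrix.
# States and probabilities are hypothetical, for illustration only.
STATES = ["Healthy", "Sick", "Dead"]

TRANSITIONS = [
    # to: Healthy, Sick, Dead
    [0.85, 0.10, 0.05],  # from Healthy
    [0.00, 0.80, 0.20],  # from Sick
    [0.00, 0.00, 1.00],  # from Dead (absorbing state)
]


def check_transition_matrix(matrix, states, tol=1e-9):
    """Return a list of problems found; an empty list means all checks passed."""
    problems = []
    if len(matrix) != len(states):
        problems.append(f"expected {len(states)} rows, found {len(matrix)}")
    for name, row in zip(states, matrix):
        if len(row) != len(states):
            problems.append(f"row '{name}' has {len(row)} entries")
        if any(p < 0 or p > 1 for p in row):
            problems.append(f"row '{name}' has a probability outside [0, 1]")
        # Compare with a tolerance: floating-point sums are rarely exact.
        if abs(sum(row) - 1.0) > tol:
            problems.append(f"row '{name}' sums to {sum(row):.6f}, not 1")
    return problems


def run_cohort(matrix, start, n_cycles):
    """Propagate a cohort distribution through the model for n_cycles."""
    trace = [list(start)]
    for _ in range(n_cycles):
        prev = trace[-1]
        nxt = [sum(prev[i] * matrix[i][j] for i in range(len(prev)))
               for j in range(len(matrix))]
        trace.append(nxt)
    return trace


if __name__ == "__main__":
    print(check_transition_matrix(TRANSITIONS, STATES))  # []
    trace = run_cohort(TRANSITIONS, [1.0, 0.0, 0.0], n_cycles=10)
    # The cohort should be conserved: each cycle's state shares sum to 1.
    assert all(abs(sum(cycle) - 1.0) < 1e-9 for cycle in trace)
```

In R, a comparable check could compare base `rowSums()` of the matrix against 1 using a tolerance rather than exact equality, for the same floating-point reason.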

R Packages presented that I’m ‘tracking delivery’ of:

→ assertHE: an R package to improve quality assurance (verification) of health economic decision models (dark-peak-analytics/assertHE on github.com)

→ easyBIM: an R package for budget impact modelling (slides: “easyBIM – R HTA Slides.pdf” in the r-hta/r-hta-workshop-2024 repository on github.com)

→ REEEVR: automated conversion of Excel to R (slides: “R-HTA 2024 presentation – Michael ODonnell .pdf” in the r-hta/r-hta-workshop-2024 repository on github.com)

Current Resources

- The workshop organisers and speakers have provided lots of information, including the slides and links to relevant code, through this GitHub page: r-hta/r-hta-workshop-2024, open-source code from the R-HTA workshop held on 28th June to 2nd July 2024 (github.com)

- Additional information on previous workshops is available on their website: R for HTA annual workshop (r-hta.org)

- Dutch HTA guidelines for R: https://english.zorginstituutnederland.nl/publications/publications/2022/12/15/guideline-for-building-cost-effectiveness-models-in-r

I end on the note that I tried to use ChatGPT and Gemini to help me write this post. Whilst some heavy lifting was done by the bots (like writing the structure and adding specific summaries for the introduction), they needed quite a lot of spoon-feeding to be of maximum use. This ties into the theme of the panel, and a quote (slightly paraphrased) from the day: “AI will not likely replace all of our jobs, but people not using AI may be replaced at work by those who can use AI”. So I very much look forward to discussing what resources R for HTA, associated organisations and, of course, OI Pharma Partners will produce linking AI and R for HTA in the near future. Happy coding :)

A health economist in a health tech world: LSX Congress 2024 Highlights

Dr Nichola Naylor

Earlier this year (April 2024) I attended the LSX World Congress in London. It was a huge event with three large streams: health tech, med tech and biotech. As a health economist who has recently entered the world of these “techs”, I wasn’t wedded to one particular stream, instead attending talks that fit my key interests: (1) real-world applications of machine learning (ML) and artificial intelligence (AI) within healthcare systems, (2) equity, (3) efficiency and (4) market access (MA) and health technology appraisal (HTA) (not in order of importance!). Before I dive into some of my personal takeaways from the conference, it might be helpful to first delve into some of these concepts and how they fit together (see below).

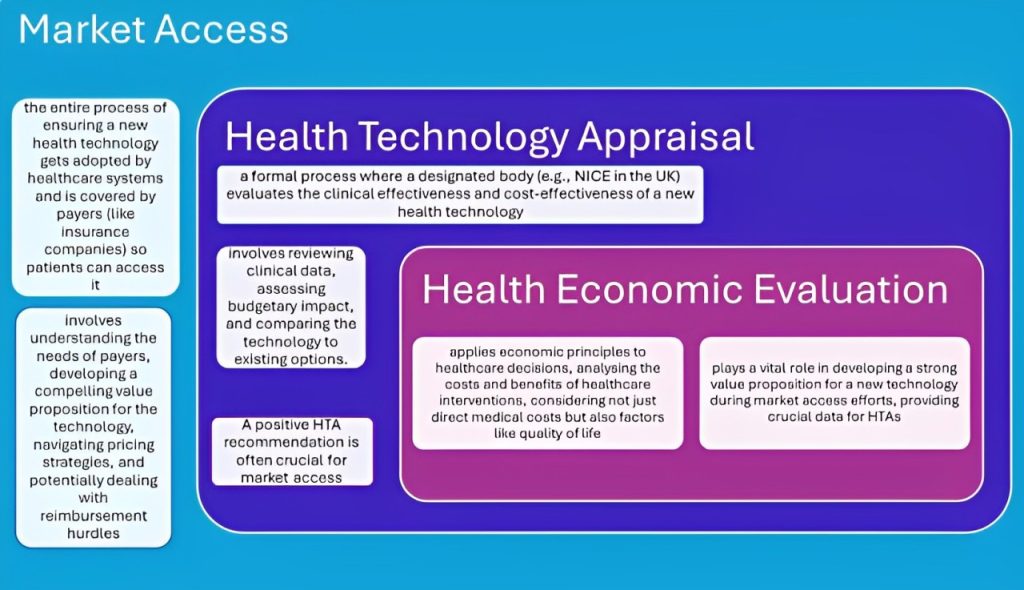

Figure 1. Unscrambling “MA”, “HTA” & “HE”


Figure 1 is a simplified diagram (created with the help of Gemini), but it highlights how market access (MA), health technology appraisal (HTA), and health economics (HE) are interconnected. By working together, they ensure that innovative health technologies reach patients while also being financially sustainable for healthcare systems. So how do equity and efficiency fit into these?

● Equity: In healthcare, equity can mean ensuring everyone has fair access to quality healthcare, regardless of factors like socioeconomic status or background.

● Efficiency: In health economics, efficiency often means getting the most health benefit for the resources available. It considers not just the direct costs of a treatment but also potential savings from improved health outcomes, like increased productivity.
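To make “getting the most health benefit for the resources available” a little more concrete, cost-effectiveness analyses often compare options using the incremental cost-effectiveness ratio (ICER) and net monetary benefit (NMB). A minimal Python sketch, in which the costs, QALYs and willingness-to-pay threshold are all hypothetical and not taken from LSX or any real product:

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    cost: float   # total discounted cost per patient (hypothetical)
    qalys: float  # total discounted QALYs per patient (hypothetical)


def icer(new: Option, comparator: Option) -> float:
    """Incremental cost per QALY gained by `new` versus `comparator`."""
    d_cost = new.cost - comparator.cost
    d_qalys = new.qalys - comparator.qalys
    if d_qalys == 0:
        raise ValueError("ICER is undefined when the QALY difference is zero")
    return d_cost / d_qalys


def net_monetary_benefit(option: Option, wtp_per_qaly: float) -> float:
    """QALYs valued at the willingness-to-pay threshold, minus costs."""
    return option.qalys * wtp_per_qaly - option.cost


usual_care = Option("Usual care", cost=10_000.0, qalys=5.0)
new_tech = Option("New tech", cost=16_000.0, qalys=5.5)
threshold = 20_000.0  # hypothetical willingness to pay per QALY

print(icer(new_tech, usual_care))                   # 12000.0
print(net_monetary_benefit(new_tech, threshold))    # 94000.0
print(net_monetary_benefit(usual_care, threshold))  # 90000.0
```

Here the new tech costs 12,000 per QALY gained, below the assumed threshold, which matches its higher NMB: when the new option adds QALYs, a positive incremental NMB and an ICER below the threshold are equivalent. Savings such as productivity gains would enter through the cost inputs, depending on the perspective chosen.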

These concepts can be seen as two sides of the same coin: Market access efforts that prioritise equity ensure new technologies reach underserved populations, promoting fairer health outcomes. However, achieving equity can sometimes come at the expense of short-term efficiency. For example, a new drug may be more expensive than existing options, but if it offers a significant breakthrough for a specific, underserved patient group, the long-term benefits may outweigh the initial cost. HTA processes play a crucial role in finding this balance.

So, using the LSX conference as an anchor, let’s sail through these concepts in the sea of “the techs” and see how they interact …

What can tech do in health?

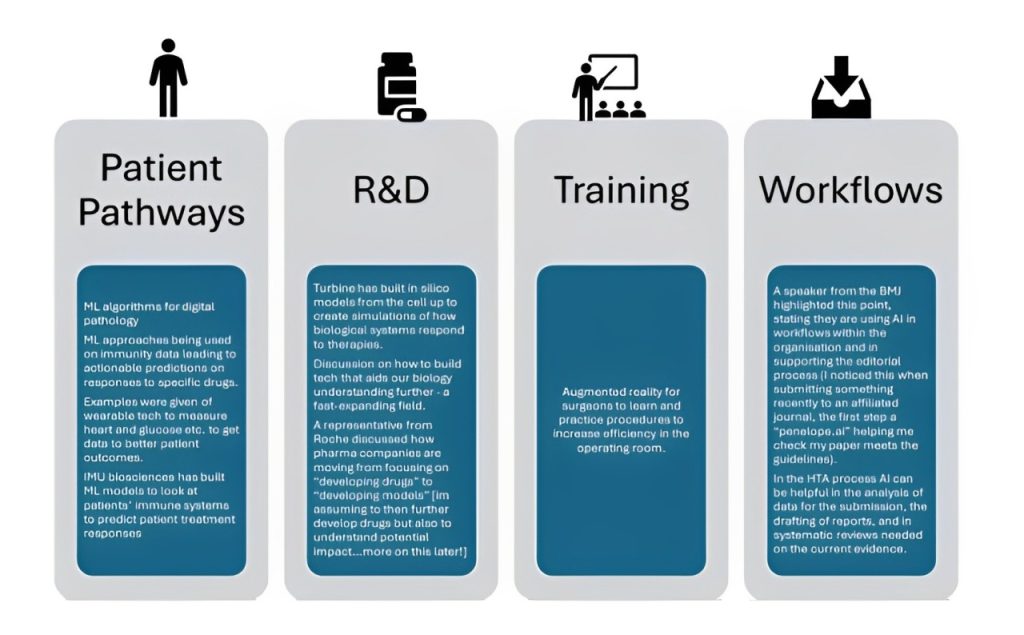

This is by no means an exhaustive list (not even close), but after trawling through my notes, I thought it was worth highlighting some of the examples presented and discussed at LSX to highlight the potential breath of impact, see Figure 2 below.

Equity Considerations:

Figure 2. Examples of Tech Discussed at LSX

Equity Considerations:

Across many of the sessions there was an underlying hum of “we need people to trust the tech”, and of how potential bias and ethical issues need to be addressed across the tech pipeline in order to build this trust. This means considering potential bias and ethics in model training, in the patient groups used in trials, and in equity of access to the tech itself (for example, not all patients may have smartphones). On equity of access, there was also discussion about different reimbursement pathways: for example, if there are higher out-of-pocket costs for consumers, this will likely be inequitable for those with less disposable income. There may also be legal and ethical issues linked to health data, such as abortion and associated women’s health data in the US; these need to be carefully considered.

There was discussion of synthetic control groups, and their potential benefits. This is where researchers identify a group receiving the new treatment (treatment group), analyse historical data from a large pool of similar individuals (potential control group), and using statistical methods, they create a synthetic control group that closely resembles the treatment group in all relevant aspects except receiving the new treatment. And while this approach does offer advantages, there are equity considerations; the quality and representativeness of the data used to build the control group are crucial and if the historical data reflects existing inequalities in access to healthcare, the synthetic control group might not accurately represent how the new treatment would affect a broader population.
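The session summary doesn’t say which statistical method was used to build the synthetic control group. As one illustrative option, here is a minimal Python sketch of greedy 1:1 nearest-neighbour matching on baseline covariates, with hypothetical patients and an assumed scaling of age per decade; real analyses would typically use propensity scores, far more covariates and formal balance diagnostics. Note that the econometric “synthetic control method”, which builds a weighted combination of controls, is a different technique.

```python
import math

# Hypothetical patients: (id, age, severity score, deprived-area flag 0/1).
# None of these records come from any real trial or registry.
TREATED = [
    ("T1", 64, 3.1, 1),
    ("T2", 71, 2.4, 0),
    ("T3", 58, 4.0, 1),
]
HISTORICAL_POOL = [
    ("H1", 66, 3.0, 0),
    ("H2", 70, 2.5, 0),
    ("H3", 57, 3.9, 1),
    ("H4", 80, 1.2, 0),
    ("H5", 63, 3.3, 1),
]
# Assumed scaling so each covariate contributes comparably (age per decade).
SCALES = (10.0, 1.0, 1.0)


def distance(a, b, scales=SCALES):
    """Euclidean distance between two patients on scaled covariates."""
    return math.sqrt(sum(((x - y) / s) ** 2
                         for x, y, s in zip(a[1:], b[1:], scales)))


def match_controls(treated, pool):
    """Greedy 1:1 nearest-neighbour matching without replacement."""
    if len(pool) < len(treated):
        raise ValueError("historical pool is smaller than the treated group")
    available = list(pool)
    matches = {}
    for patient in treated:
        best = min(available, key=lambda c: distance(patient, c))
        matches[patient[0]] = best
        available.remove(best)  # each historical patient is used at most once
    return matches


def share_flagged(patients, col=3):
    """Proportion of patients with the flag in column `col` set to 1."""
    return sum(p[col] for p in patients) / len(patients)


matches = match_controls(TREATED, HISTORICAL_POOL)
print({t: c[0] for t, c in matches.items()})  # {'T1': 'H5', 'T2': 'H2', 'T3': 'H3'}
# Crude equity check: is the deprived-area share similar in both groups?
print(share_flagged(TREATED), share_flagged(list(matches.values())))
```

The last line is a crude version of the equity check described above: matching only balances the covariates you observe, and if a group is under-represented in the historical pool, its members may get poor matches (or none), so comparing subgroup shares and match distances is worth doing before trusting the comparator.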

So what can we, as future researchers, do? Pfizer mentioned the use of “Ethicara”, a bias detection service (an “AI risk assessment for responsible AI model development”; see the Program Schedule, goeshow.com). We can also strive to create and share more diverse data sets, for example (where possible) by sharing data from different trials (with good GDPR practices and “fair and proportional” data flows, of course). There was an interesting discussion about how people now share banking data more willingly through apps, and what we can learn from this. The solutions proposed were promoting health data literacy and understanding of the potential benefits to the data holders themselves. We need to make it easier for data to be collected in healthcare settings, but also to start building more holistic pictures by bringing in social determinants data where possible and relevant.

Tech can make it easier for patients to enrol, such as a Roche cancer trial app, or to take part away from the trial site, such as Altoida giving patients tech to take home for taking measurements. However, ease and ability of use need to be considered from an equity perspective for the intended populations. Tech can also help reduce participant burden: for example, Merck uses smart filters on its trial protocols to see what can be reduced or is really needed, and Roche has tech that helps employees feel like a patient going through that journey, making them more aware of the whole experience from the patient’s perspective.

Now, onto a theme I noticed throughout the conference: VALUE PROPOSITIONS FOR STAKEHOLDERS. I know this might read as self-serving (because it is), but I felt as though health economics could fill a huge hole in the health/med tech value proposition pieces. Speakers’ answers to participant questions often had some element of “you need to demonstrate value”, but value goes beyond initial estimates of ROI for investors. To get a good ROI, you need stakeholders (including patients and payers) to understand the efficiency implications of investing in and/or taking up the tech…

This is where health economics can come in…

Health economic models can (i) highlight underserved populations, and their current cost burden to healthcare systems, that could be targeted and (ii) determine cost-effective pricing and potential populations, thereby providing key evidence on potential reimbursement returns. They can do this with uncertain data from literature reviews, trials and real-world evidence. Value of information analyses can show which parameters’ uncertainty matters most for cost-effectiveness results, and such parameters could then be highlighted as key ones to collect in future observational studies. Beyond this, we need to start understanding the economic value of disruption, change management and wider behaviour changes resulting from tech implementation, to build a wider value proposition for use in healthcare.
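Value of information analysis is usually run on the output of a probabilistic sensitivity analysis (PSA). Below is a minimal Python sketch of the simplest measure, the per-patient expected value of perfect information (EVPI: the expected net benefit of choosing the best option in every draw, minus the net benefit of the option that is best on average), using a handful of hypothetical net-benefit draws. Identifying which individual parameters matter most would need partial EVPI (EVPPI) methods, which are not shown here.

```python
from statistics import mean

# Hypothetical PSA output: net monetary benefit per patient for each option
# (columns: usual care, new tech) across simulated parameter draws (rows).
NB_SAMPLES = [
    [90_000.0, 94_000.0],
    [88_000.0, 85_000.0],
    [91_000.0, 99_000.0],
    [89_000.0, 86_000.0],
]


def evpi(nb_samples):
    """Per-patient expected value of perfect information."""
    n_options = len(nb_samples[0])
    # Current information: choose the option with the highest expected NB.
    expected_nb = [mean(row[d] for row in nb_samples) for d in range(n_options)]
    value_current = max(expected_nb)
    # Perfect information: choose the best option separately in every draw.
    value_perfect = mean(max(row) for row in nb_samples)
    return value_perfect - value_current


print(evpi(NB_SAMPLES))  # 1500.0
```

Multiplying the per-patient EVPI by the (discounted) number of patients affected by the decision gives a population EVPI, an upper bound on what further research to reduce uncertainty could be worth.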

There were a lot of sessions on market entry of tech products, which is of course a key step in the journey of adoption. However, market access (as noted earlier) is key to having an ongoing ability to operate and sell the tech in that new market in a way that benefits all stakeholders. Cost-effectiveness from the payer/provider perspective can be a key factor in a successful market access strategy, whilst understanding the cost-effectiveness of scalability from the industry perspective can help ensure long-term adoption.

A lot of tech companies tout their product’s ability to increase efficiency in the healthcare system, but then do not investigate what this actually means in terms of money saved and gains in population health. Understanding these links, both in terms of direct cost savings (e.g. fewer unnecessary operations or drugs) and opportunity cost savings (e.g. more free hospital beds), should help further the adoption of truly efficient tech in healthcare.

In terms of incorporating equity impacts, extended cost-effectiveness analysis can also be done, exploring the distributional effects of tech adoption and use, as well as financial risk protection.

My advice to tech companies and payers would be to think about what the overall objectives are, and how these translate to the outcomes you want for any economic analyses. Stakeholders need to talk to each other in each different market. Some markets might be quality-of-life focused, some budget impact, some clinical improvements, some all of the above, which will require different data and different modelling approaches. The earlier health economics is integrated into tech creation, entry, adoption, integration, optimization and scaling, the more efficient and equitable these processes can be across healthcare systems.